- Blog

- December 1, 2021

Data Lake Failures: How to Avoid

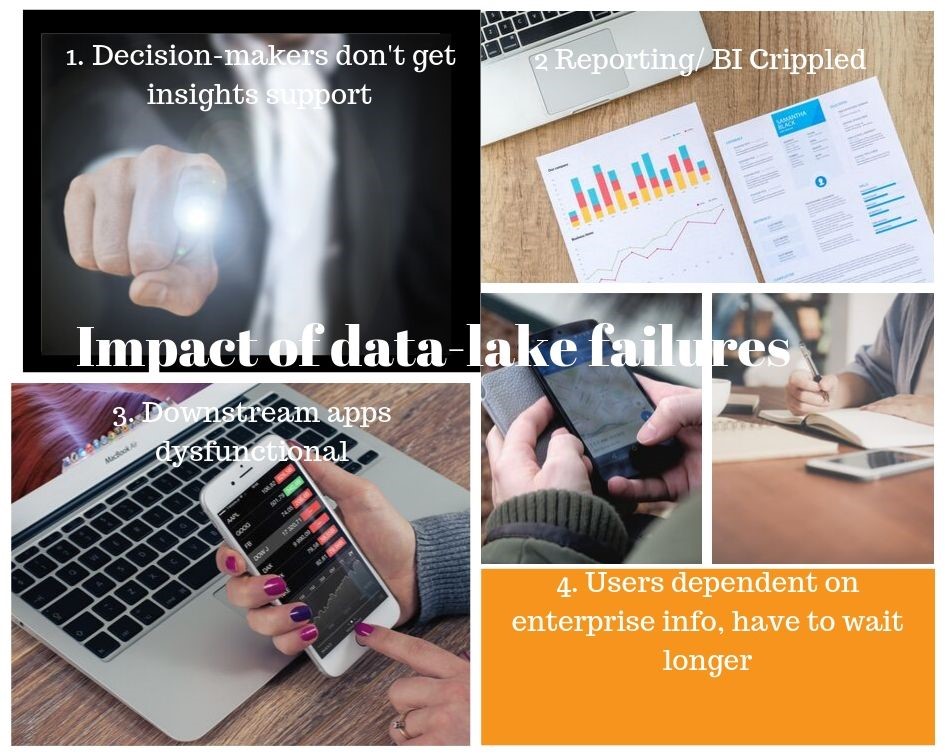

A data lake is a single source of information for business users: it stores enterprise-level data that many functional units rely on. Whenever the flow of information from an enterprise data lake is interrupted, therefore, the impact spreads across the organization and can cause costly business disruption.

Data lake failures are generally of three types:

· A data lake is completely down

· A data lake is partially available

· A data lake is frequently unavailable

Whatever the type of failure, disrupted data flow has numerous effects:

1. Business users’ ability to function (e.g., decision-making/policymaking) is severely impacted

2. The BI/reporting team’s quality of work and timely delivery suffer

3. Downstream business applications consuming the data are rendered ineffective

4. People don’t get the data or information they are waiting for, which hampers their ability to operate effectively

Clearly, none of these outcomes, or their impact on the business, is acceptable to an organization. The consequences range from operational logjams, reduced output and performance, and failed customer service or delivery to collateral damage, negative investor sentiment, and dented top and bottom lines.

MSRcosmos believes in empowering its customers through proactive measures rather than reacting after a data lake failure has occurred (though, of course, we can help with that as well).

Accordingly, we recommend that all our customers adopt a multi-pronged approach to preventing data lake failures.

The 5-pronged data lake failure prevention strategy

Data lake failures vary in the magnitude of their impact, and they occur for many reasons. Outages can stem from user error, policies, infrastructure, lack of preparedness, or a lack of timely intervention. Our failure prevention strategy is therefore closely tied to the ways in which failures occur.

1. Data lake security and associated policies

Platform Access and Privileges

Access to the data platform, and the privileges that come with it, must be granted on a granular basis and monitored constantly, because some users, unintentionally or willfully, tamper with the data. For example, if someone accidentally deletes records that feed the data flow, the results could be disastrous. To nip such possibilities in the bud, keep a close check on user access.
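To make “keep a check on user access” concrete, here is a minimal sketch of a least-privilege check with an audit trail. The role names, the `ROLE_PRIVILEGES` map, and the `AccessAuditor` class are hypothetical. A real platform would enforce this in its own authorization layer, not in application code.

```python
from dataclasses import dataclass, field

# Hypothetical privilege map: each role gets only the operations it needs.
ROLE_PRIVILEGES = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

@dataclass
class AccessAuditor:
    """Checks requested operations against role privileges and records every attempt."""
    log: list = field(default_factory=list)

    def authorize(self, user: str, role: str, operation: str, dataset: str) -> bool:
        # Unknown roles get an empty privilege set, so they are denied by default.
        allowed = operation in ROLE_PRIVILEGES.get(role, set())
        # Log granted and denied attempts alike so access can be reviewed continuously.
        self.log.append((user, role, operation, dataset, allowed))
        return allowed

    def denied_attempts(self) -> list:
        return [entry for entry in self.log if not entry[4]]

auditor = AccessAuditor()
print(auditor.authorize("maya", "analyst", "delete", "sales.orders"))  # False
print(auditor.authorize("raj", "admin", "delete", "sales.orders"))     # True
print(auditor.denied_attempts())
```

Reviewing the denied attempts regularly shows who is trying to do more than their role allows.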

Network Isolation

A strong firewall around the enterprise network not only makes it harder to breach but also isolates it from intruders.
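Firewalls and security groups do the actual enforcement. The rule they apply, however, is usually a default-deny allowlist. The sketch below illustrates that logic with Python’s standard `ipaddress` module. The subnets listed are hypothetical examples, not recommendations.

```python
import ipaddress

# Hypothetical internal subnets allowed to reach the data lake; everything else is denied.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),    # example analytics subnet
    ipaddress.ip_network("192.168.5.0/24"),  # example ETL subnet
]

def is_allowed(source_ip: str) -> bool:
    """Default-deny check: accept a connection only from an allowlisted subnet."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in ALLOWED_NETWORKS)

for ip in ["10.20.3.14", "192.168.5.200", "203.0.113.7"]:
    print(ip, "allow" if is_allowed(ip) else "deny")
```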

Data Protection

Encrypting data at rest and in transit helps keep your enterprise data protected.

Document-Level Security

Role-based, document-level security ensures that only authorized personnel can access documents, and that each user can retrieve only the documents they have permission to access.
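Here is a minimal sketch of role-based, document-level filtering. It assumes each document carries a hypothetical `allowed_roles` set. A user sees a document only when one of their roles appears in that set.

```python
from dataclasses import dataclass
from typing import Iterable, List

@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_roles: frozenset  # roles permitted to read this document

def visible_documents(docs: Iterable[Document], user_roles: Iterable[str]) -> List[str]:
    """Return the IDs of documents that share at least one role with the user."""
    roles = set(user_roles)
    return [d.doc_id for d in docs if d.allowed_roles & roles]

# Hypothetical documents and roles.
docs = [
    Document("q3-revenue", frozenset({"finance"})),
    Document("hr-salaries", frozenset({"hr"})),
    Document("product-catalog", frozenset({"finance", "sales", "hr"})),
]
print(visible_documents(docs, ["sales"]))    # ['product-catalog']
print(visible_documents(docs, ["finance"]))  # ['q3-revenue', 'product-catalog']
```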

2. Performance evaluation and scaling

If data reaching its intended recipients, especially downstream applications, is delayed because the pipeline is too slow, the output and performance of those applications suffer severely. It is therefore crucial to establish an optimal speed and to maintain it through continuous, analytics-driven performance evaluation.
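One simple way to evaluate performance continuously is to compare a high percentile of delivery latency against a target. Averages can hide the slow batches that downstream apps actually notice. This sketch uses `statistics.quantiles` from the standard library. The 60-second target and the sample latencies are illustrative assumptions.

```python
import statistics
from typing import List, Tuple

def check_latency(latencies_s: List[float], target_p95_s: float) -> Tuple[float, bool]:
    """Return the observed 95th-percentile latency and whether it meets the target."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies_s, n=20)[-1]
    return p95, p95 <= target_p95_s

# Hypothetical end-to-end delivery latencies (seconds) for recent batches.
batch_latencies = [42, 38, 51, 47, 120, 44, 39, 40, 45, 43]
p95, ok = check_latency(batch_latencies, target_p95_s=60)
print(f"p95={p95:.1f}s within target: {ok}")
```

In practice, a check like this would run on a schedule and raise an alert when the target is missed.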

Next is scalability, which is critically important for big data analytics. As business operations grow, data volumes inevitably grow too. A data lake built to support a few terabytes will not hold up and can collapse under the flood of incoming data. You therefore need to plan for an optimal number of licenses as well as a scalable infrastructure.
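Planning for growth can start with a back-of-the-envelope projection. The sketch below assumes data grows at a steady compound monthly rate, which is a simplification. It counts how many months remain before the data outgrows provisioned capacity. The figures in the example are hypothetical.

```python
def months_until_full(current_tb: float, capacity_tb: float, monthly_growth: float) -> int:
    """Count whole months until compound data growth exceeds provisioned capacity."""
    if monthly_growth <= 0:
        raise ValueError("monthly_growth must be positive")
    months, size = 0, current_tb
    # Grow the data month by month until it no longer fits.
    while size <= capacity_tb:
        size *= 1 + monthly_growth
        months += 1
    return months

# 5 TB today, 10 TB provisioned, growing 10% per month.
print(months_until_full(5, 10, 0.10))  # 8
```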

Big data analytics frameworks such as Hadoop and Spark are designed to scale horizontally. As data or processing needs grow, you can simply add more nodes to the cluster, which keeps processing continuous and uninterrupted. To realize this benefit, however, the storage layer must also scale linearly.
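A rough node-count estimate illustrates the linear-scaling point. The sketch assumes every block is stored three times, which is the HDFS default replication factor. It also reserves a hypothetical 25% headroom for intermediate data. Doubling the raw data roughly doubles the number of nodes required.

```python
import math

def nodes_required(raw_data_tb: float, usable_tb_per_node: float,
                   replication_factor: int = 3, headroom: float = 0.25) -> int:
    """Estimate worker nodes needed so storage keeps pace with data volume."""
    # Each block is stored replication_factor times across the cluster.
    stored_tb = raw_data_tb * replication_factor
    # Hypothetical headroom for temporary/intermediate data and short-term growth.
    return math.ceil(stored_tb * (1 + headroom) / usable_tb_per_node)

print(nodes_required(100, 12))  # 32
print(nodes_required(200, 12))  # 63
```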

3. High Availability (HA) and Disaster Recovery (DR)

One of the most essential steps in preventing data lake failures is to have the right HA measures in place. A standby server that is invoked automatically if the master server runs into trouble greatly reduces the chances of a data lake failure.
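Automatic takeover of this kind is usually driven by heartbeats. Below is a deliberately simplified sketch of the idea: if the active server misses its heartbeat window, the standby is promoted. The class and its timeout are hypothetical. Production HA systems, such as HDFS NameNode HA, also coordinate leadership and fence the failed node, and this sketch omits both.

```python
import time
from typing import Optional

class FailoverController:
    """Promote the standby when the active (master) server misses its heartbeat window."""

    def __init__(self, active: str, standby: str, timeout_s: float = 10.0):
        self.active, self.standby = active, standby
        self.timeout_s = timeout_s
        self.last_heartbeat = time.monotonic()

    def heartbeat(self, now: Optional[float] = None) -> None:
        # Called whenever the active server reports that it is healthy.
        self.last_heartbeat = time.monotonic() if now is None else now

    def check(self, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        if now - self.last_heartbeat > self.timeout_s:
            # Swap roles: the standby takes over; the failed node becomes the standby to repair.
            self.active, self.standby = self.standby, self.active
            self.last_heartbeat = now  # give the newly active node a fresh window
        return self.active

ctl = FailoverController("master-1", "standby-1", timeout_s=10.0)
ctl.heartbeat(now=100.0)
print(ctl.check(now=105.0))  # master-1
print(ctl.check(now=111.0))  # standby-1
```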

Examples of HA approaches that can be adopted:

· Metadata HA

Metadata HA is most valuable for long-running cluster operations, because the metadata holds critical information about where application data and its replicas are located.

· MapReduce HA

MapReduce HA keeps jobs executing even when the associated job trackers or resource managers go down.

· NFS HA

Another effective HA measure is to mount the cluster via an HA-enabled NFS. This ensures continuous, uninterrupted access both to streaming data and to applications that require random read/write operations.

· Rolling upgrades

Rolling upgrades are another good way to minimize disruption. Deploying updated components incrementally avoids downtime. By performing maintenance or software upgrades on a few nodes at a time while the rest of the cluster keeps running, you can eliminate planned downtime.
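The rolling-upgrade pattern can be sketched as batch-by-batch processing that halts on the first failure. The `upgrade` callback is a hypothetical stand-in for draining, patching, and restarting a node. A real rollout would also verify cluster health between batches.

```python
from typing import Callable, List

def rolling_upgrade(nodes: List[str], batch_size: int,
                    upgrade: Callable[[str], bool]) -> List[str]:
    """Upgrade nodes a few at a time so the rest of the cluster keeps serving."""
    if not 0 < batch_size < len(nodes):
        raise ValueError("batch_size must leave at least one node in service")
    upgraded: List[str] = []
    for start in range(0, len(nodes), batch_size):
        batch = nodes[start:start + batch_size]
        # Only this batch is out of service; the remaining nodes keep running.
        for node in batch:
            if not upgrade(node):
                # Halt so a bad release never reaches the whole cluster.
                raise RuntimeError(f"upgrade failed on {node}; halting rollout")
        upgraded.extend(batch)
    return upgraded

def fake_upgrade(node: str) -> bool:
    # Hypothetical stand-in for drain, patch, restart, health check.
    print("upgrading", node)
    return True

cluster = [f"worker-{i}" for i in range(1, 7)]
rolling_upgrade(cluster, batch_size=2, upgrade=fake_upgrade)
```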

Another critical step towards data lake failure prevention is to have a sturdy disaster recovery (DR) setup.

Backups – Make a Hadoop distribution that can take volume-level snapshots of a cluster (all the data, including files and database tables) part of your DR strategy. A snapshot is taken instantaneously and gives a consistent view of the data, because its contents never change after it is created.
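The platform creates the snapshots itself, but deciding how many to keep is a policy choice. This sketch shows a simple keep-the-newest-N retention rule. The `keep_last` value and the daily schedule in the example are assumptions.

```python
from datetime import datetime, timedelta
from typing import List

def snapshots_to_prune(snapshots: List[datetime], keep_last: int) -> List[datetime]:
    """Keep the newest `keep_last` snapshots; return older ones (oldest first) for deletion."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1 so a restore point always exists")
    newest_first = sorted(snapshots, reverse=True)
    return sorted(newest_first[keep_last:])

# Example: ten daily snapshots, retaining the most recent seven.
taken = [datetime(2021, 12, 1) - timedelta(days=d) for d in range(10)]
for snap in snapshots_to_prune(taken, keep_last=7):
    print("prune", snap.date())
```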

Have a Converged Data Platform –

Experience shows that backups alone may not be enough for disaster recovery. It is therefore prudent to set up a converged data platform for big data disaster recovery. Such a platform lets you manage multiple big data clusters across several locations and infrastructure types (cloud or on-premises), regardless of the service provider, and keeps the data consistent and up to date across all clusters.
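Keeping clusters consistent requires a way to detect when they drift apart. The minimal sketch below assumes each cluster can export a dataset’s rows for comparison. It fingerprints each dataset with SHA-256 and reports the datasets that differ from a reference cluster. The cluster and dataset names are hypothetical. At scale, you would compare per-partition checksums rather than full row lists.

```python
import hashlib
from typing import Dict, List

def fingerprint(rows: List[str]) -> str:
    """Order-independent SHA-256 digest of a dataset's rows."""
    digest = hashlib.sha256()
    for row in sorted(rows):
        digest.update(row.encode("utf-8"))
        digest.update(b"\n")  # separator between rows
    return digest.hexdigest()

def out_of_sync(clusters: Dict[str, Dict[str, List[str]]], reference: str) -> Dict[str, List[str]]:
    """For each non-reference cluster, list datasets that are missing or differ."""
    ref = {name: fingerprint(rows) for name, rows in clusters[reference].items()}
    report: Dict[str, List[str]] = {}
    for cluster, datasets in clusters.items():
        if cluster == reference:
            continue
        report[cluster] = [name for name, fp in ref.items()
                           if name not in datasets or fingerprint(datasets[name]) != fp]
    return report

clusters = {
    "on-prem": {"orders": ["1,a", "2,b"], "customers": ["c1"]},
    "cloud":   {"orders": ["2,b", "1,a"], "customers": []},
    "dr-site": {"customers": ["c1"]},
}
print(out_of_sync(clusters, reference="on-prem"))
```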

4. Effective data governance

Establish an effective data governance policy that covers how the data lake is organized, which recovery mechanisms are in place, and whether access rules are being followed. Such a policy makes it easy to regenerate information that has been, or could be, affected.
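A governance policy is easier to enforce when it is checked automatically. The sketch below validates a hypothetical dataset registry entry against the three concerns above: organization (a known zone), recovery (a defined recovery mechanism), and access (defined roles). The zone and recovery-tier names are assumptions.

```python
from dataclasses import dataclass
from typing import List

VALID_ZONES = {"raw", "curated", "consumption"}    # hypothetical lake layout
VALID_RECOVERY_TIERS = {"snapshot", "replicated"}  # hypothetical recovery options

@dataclass
class DatasetEntry:
    name: str
    owner: str
    zone: str
    recovery_tier: str
    access_roles: List[str]

def policy_violations(entry: DatasetEntry) -> List[str]:
    """Return human-readable governance problems for one registry entry."""
    problems = []
    if not entry.owner:
        problems.append("no accountable owner")
    if entry.zone not in VALID_ZONES:
        problems.append(f"unknown zone '{entry.zone}'")
    if entry.recovery_tier not in VALID_RECOVERY_TIERS:
        problems.append("no recovery mechanism")
    if not entry.access_roles:
        problems.append("no access roles defined")
    return problems

good = DatasetEntry("sales_orders", "sales-data-team", "curated", "snapshot", ["analyst"])
bad = DatasetEntry("tmp_export", "", "scratch", "none", [])
print(policy_violations(good))
print(policy_violations(bad))
```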

5. Semantic Consistency

Semantic consistency is achieved when two data units have the same semantic meaning and the same data values, which satisfies strong consistency. In practice, a semantic layer maintains metadata (for example, column definitions) that downstream applications check before they start. If the data (such as its columns) has changed, the applications adapt accordingly before running. It is therefore highly advisable to place a semantic layer on top of your raw data.
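The check that downstream applications run before starting can be as simple as diffing the semantic layer’s column definitions against the data’s current columns. Here is a minimal sketch with hypothetical column names and types:

```python
from typing import Dict, List

def schema_changes(expected: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Compare the semantic layer's column definitions with the data's current columns."""
    changes = []
    for col in expected.keys() - actual.keys():
        changes.append(f"removed: {col}")
    for col in actual.keys() - expected.keys():
        changes.append(f"added: {col}")
    for col in expected.keys() & actual.keys():
        if expected[col] != actual[col]:
            changes.append(f"type changed: {col} {expected[col]} -> {actual[col]}")
    return sorted(changes)  # deterministic order for reporting

semantic_layer = {"order_id": "bigint", "amount": "decimal(10,2)", "region": "string"}
current = {"order_id": "bigint", "amount": "double", "country": "string"}

changes = schema_changes(semantic_layer, current)
if changes:
    # A downstream app would adapt (or refuse to start) before processing.
    print("Adapt before starting:", changes)
```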

We believe that if these five steps are implemented properly, it is safe to say that data lake failures can be kept to a minimum, if not eliminated.

We have worked with dozens of customers, helping them architect an effective and highly available data lake strategy. Contact us today; we’re happy to provide feedback on your particular situation and share our best practices.

Balaji Kandregula

Lead Architect – Big-data & Analytics